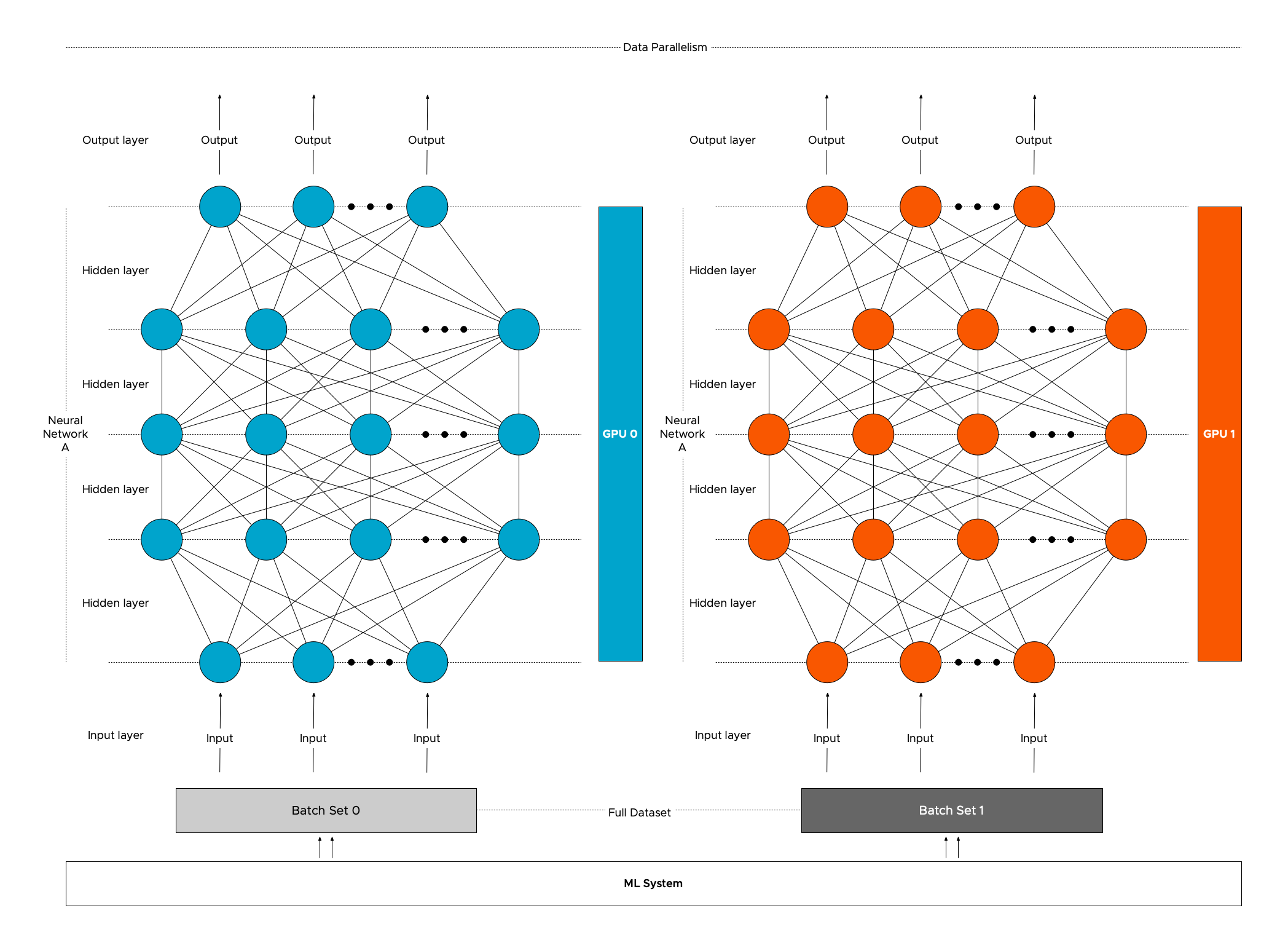

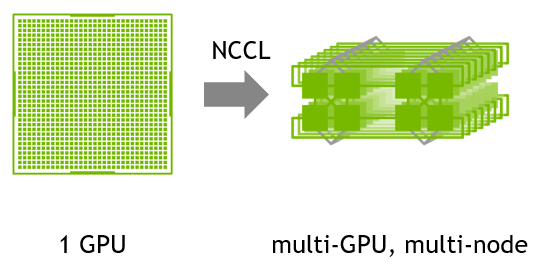

a. The strategy for multi-GPU implementation of DLMBIR on the Google...

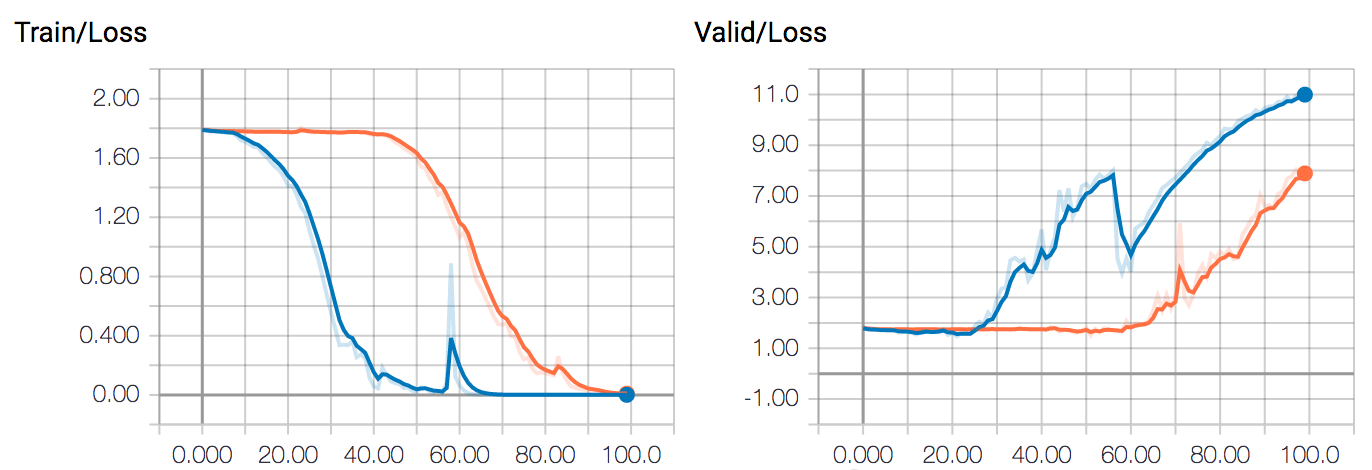

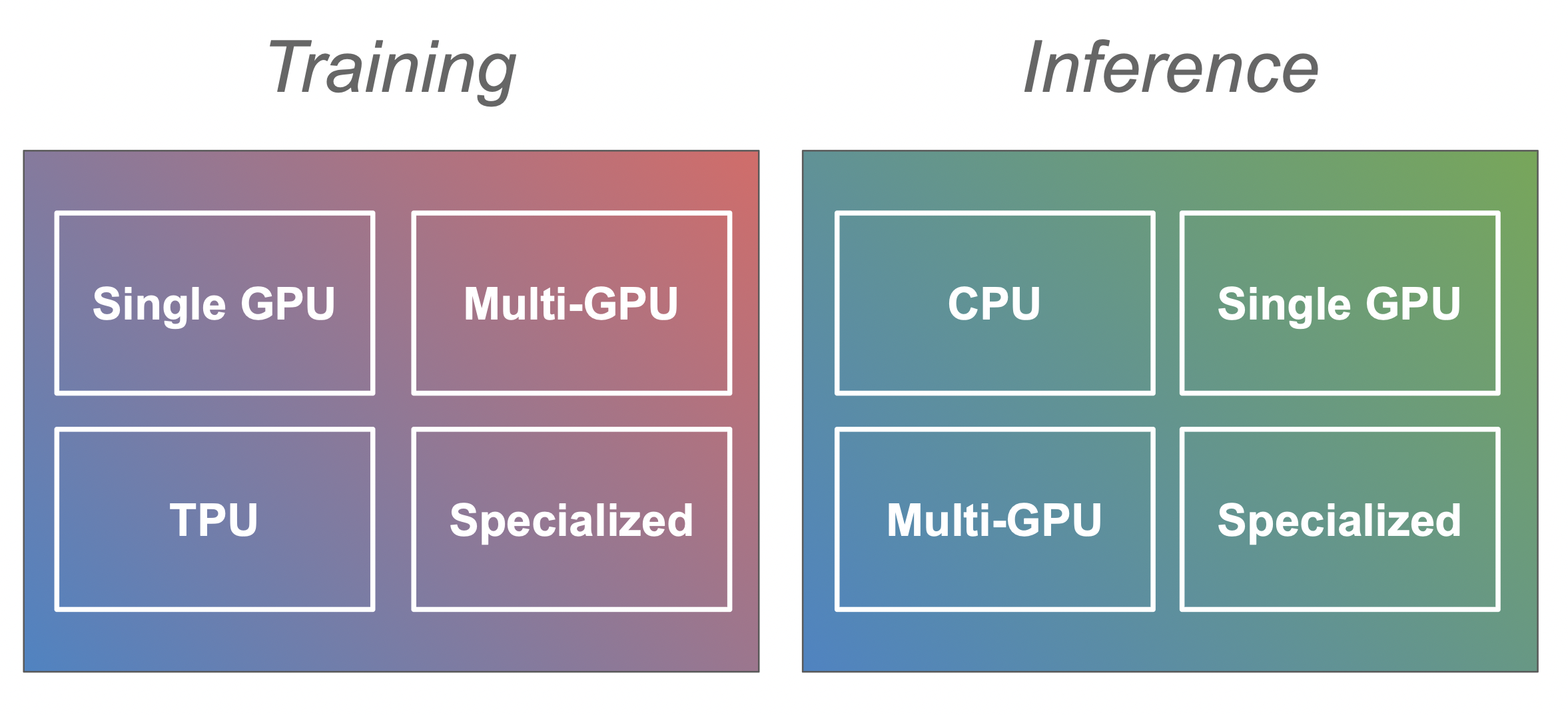

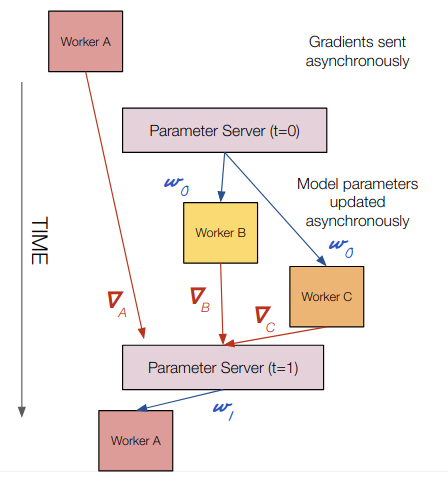

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

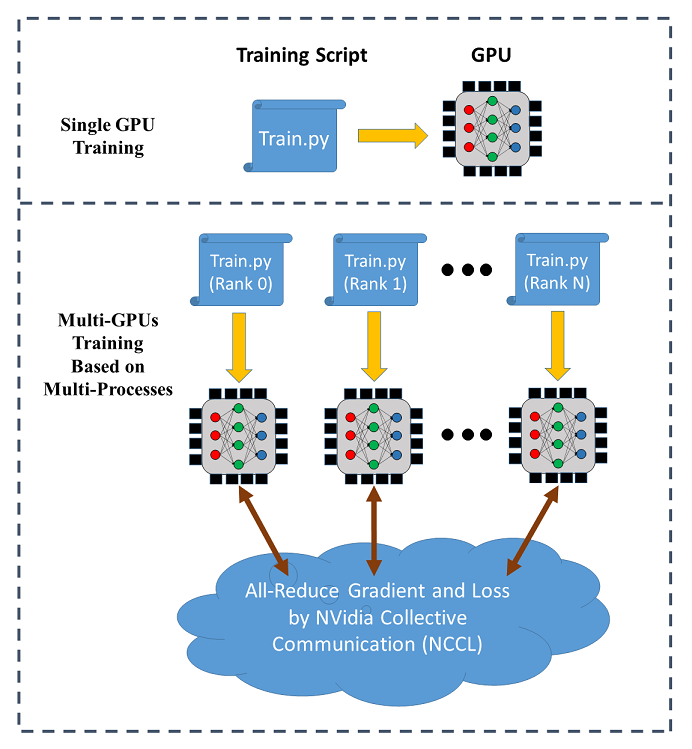

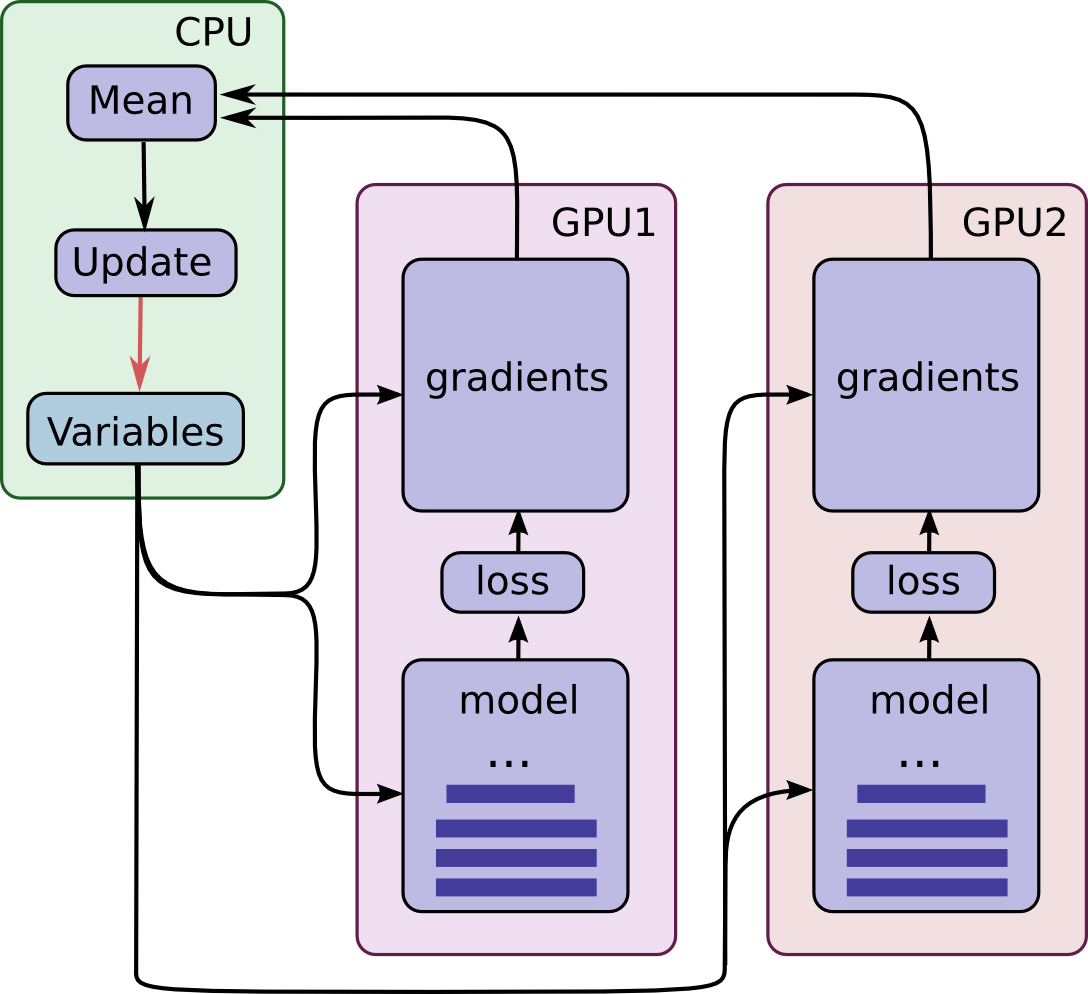

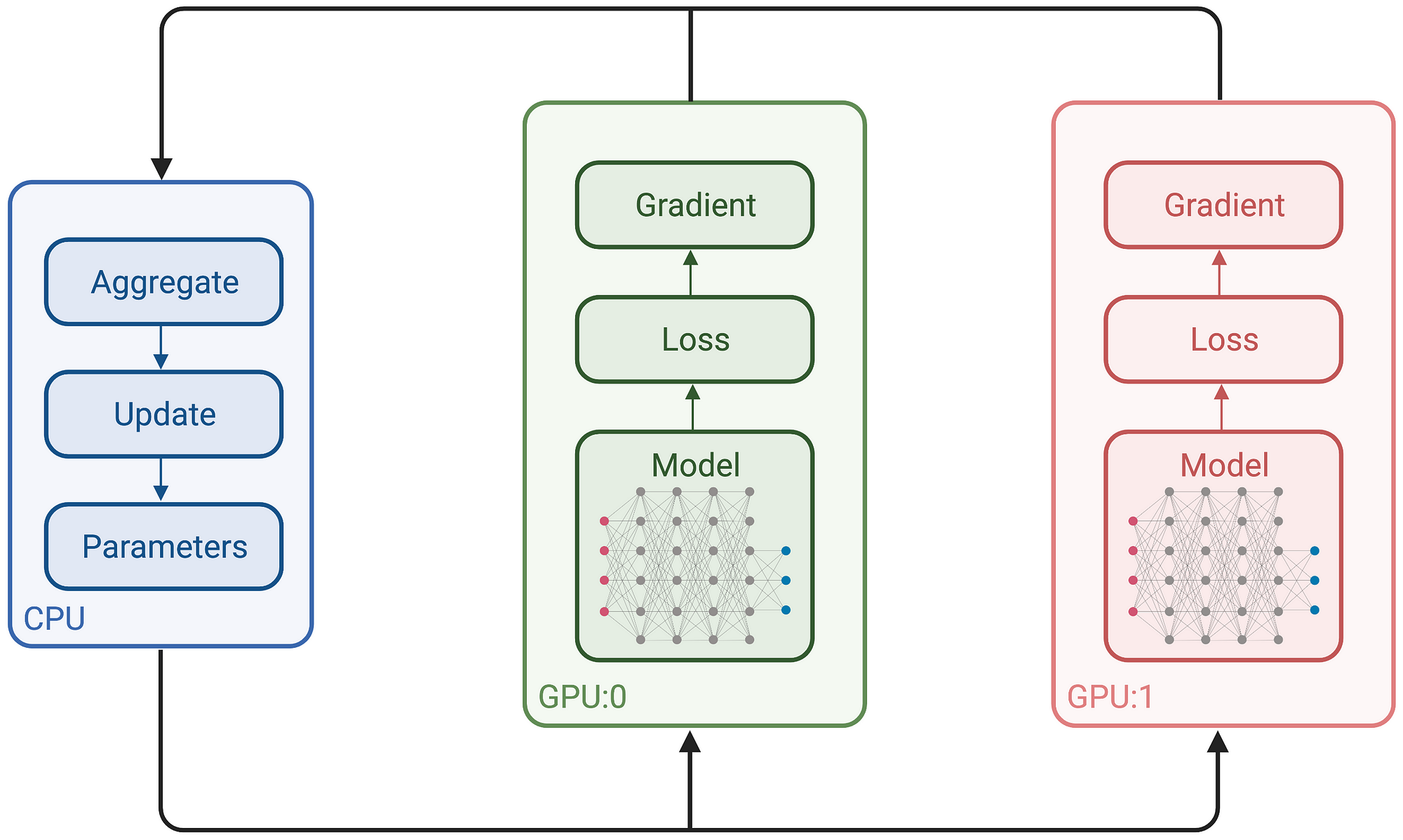

Multi-GPU training. Example using two GPUs, but scalable to all GPUs...

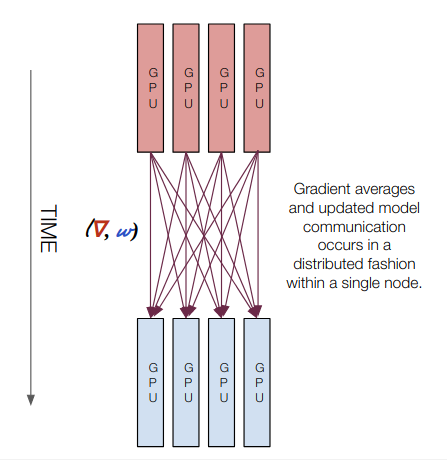

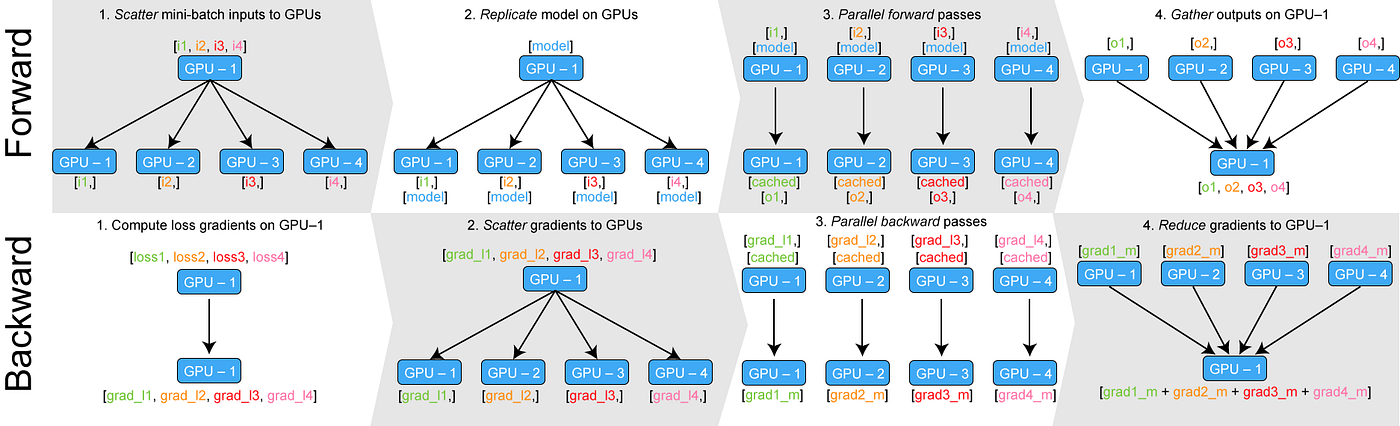

![Multi-GPU Training of ConvNets (PDF) | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/4ce50b6d21e299d60e3ae2f46408ef2b6f29cdd4/4-Figure5-1.png)